A Step in the Right Direction for NLP

It was a delight to read Emily Bender’s post Putting the Linguistics in Computational Linguistics this week: https://naacl2018.wordpress.com/2017/12/19/putting-the-linguistics-in-computational-linguistics/.

Bender’s advice on how to bring better data practices into NLP is to: Know Your Data; Describe Your Data Honestly; Focus on Linguistic Structure at Least Some of the Time; and Do Error Analysis. I appreciate her link to my article on this site about languages at the ACL conference in 2015 (Languages at ACL this year), where I showed a shocking bias towards English.

This week, it was great to see a new data set that will help us push the boundaries. While still only in English, it tackles a new dimension, Toxic Comment classification. It is posted as a Kaggle competition, meaning that it is an open source data set that anyone can run experiments on and compare results, competing for the most accurate results: Toxic Comment Classification Challenge.

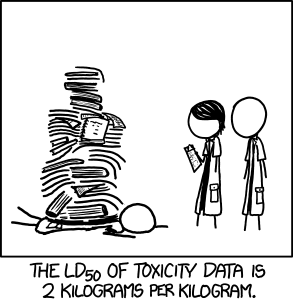

With 100,000+ comments in total, it is one of the larger data sets available for NLP. By tackling toxic comments on Wikipedia, they are hoping to enable people to build Machine Learning systems that can more broadly tackle hate speech, online harassment, cyberbullying and other negative online social behaviors. It’s an important area to study, because toxic communities tend to drive out diversity in their members. Unlike real-world toxicity, where ‘toxicity’ is measured as a physical effect on someone, like LD50 – the dose at which 50% of people will die – online toxicity is less well understood. How do you measure when someone stops participating in a community that is toxic for them, confirm the causal link, and measure additional adverse effects on their life outside of online communities? These are all questions that we can start to answer with this data set.

Source: XKCD https://xkcd.com/1260/

The data set was created by Google/Jigsaw, and the authors built it using our software at CrowdFlower. They also sourced a few thousand contributors through CrowdFlower to annotate the data according to what kind of toxic comment (if any) was made. See the original paper describing the data collection at: Ex Machina: Personal Attacks Seen at Scale, by Ellery Wulczyn at the Wikimedia Foundation and Nithum Thain and Lucas Dixon at Google/Jigsaw.

This data set was recently highlighted by Christopher Phipps as not conforming to Bender’s criteria: http://thelousylinguist.blogspot.co.il/2017/12/putting-linguistics-into-kaggle.html

I agree with most of his observations, but I am more optimistic about the data set and how those problems can be addressed, or even highlighted, by the data as it already exists.

One particular problem that Phipps highlighted was on quality control for the annotation process. He wrote:

“little IAA was done. Rather, the authors … used a set of 10 questions that they devised as test questions. Raters who had below 70% agreement on those ten were excluded”

Update: As I was writing this, Phipps removed this passage from his post after I pointed out the inaccuracy via Twitter. Thanks Christopher! I’ll keep this below in case other people are confused by the quality control method used.

This observation is correct but incomplete. In the article they report that in addition to 10 initial quiz questions, 10% of all remaining questions were also ones with ‘known’ answers from which they could track the accuracy of each contributor. From their original paper:

“Under the Crowdflower system, additional test questions are randomly interspersed with the genuine crowdsourcing task (at a rate of 10%) in order to maintain response quality throughout the task.”

So, they would have had hundreds or thousands of questions with known “gold” answers for evaluating quality, not 10. The annotators would only have been allowed to continue working so long as they were accurately answering the 10% known questions, and their results were included only so long as they maintained accuracy across those questions throughout their work. This is standard for the CrowdFlower platform. They had 10 people rate each piece of text, which is more than what we typically see: 3 to 5 is more common. At this level of quality control, the probability of deliberately bad or misinterpreted results is very low (less than 1 in a million), so the data annotation should be clean in the sense of being genuine. The authors also trialled and refined how the questions were asked in multiple iterations, before arriving at the annotation design that they launched for the 100,000+ comments that they ultimately published.
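To make the idea of ongoing quality control concrete, here is a minimal sketch of tracking each contributor's accuracy on interspersed gold questions and keeping only those above a threshold. The tuple format, the function names, and the reuse of the 70% cut-off for the ongoing questions are my assumptions for illustration; this is not CrowdFlower's actual implementation.

```python
from collections import defaultdict

def contributor_accuracy(judgments, gold):
    """Return {contributor_id: accuracy on gold questions}.

    judgments: iterable of (contributor_id, question_id, answer) tuples.
    gold: dict mapping question_id -> known answer (hypothetical format).
    """
    correct = defaultdict(int)
    seen = defaultdict(int)
    for contributor, question, answer in judgments:
        if question in gold:  # only gold questions count towards accuracy
            seen[contributor] += 1
            correct[contributor] += int(answer == gold[question])
    return {c: correct[c] / seen[c] for c in seen}

def trusted_contributors(judgments, gold, threshold=0.7):
    """Contributors whose gold accuracy meets the (assumed) threshold."""
    accuracy = contributor_accuracy(judgments, gold)
    return {c for c, a in accuracy.items() if a >= threshold}

# Toy data: g1 and g2 are gold questions, q1 is a genuine task item.
judgments = [
    ("a", "g1", "toxic"), ("a", "g2", "ok"), ("a", "q1", "toxic"),
    ("b", "g1", "ok"), ("b", "g2", "toxic"), ("b", "q1", "ok"),
]
gold = {"g1": "toxic", "g2": "ok"}
print(trusted_contributors(judgments, gold))
```

In this toy example only contributor "a" answers the gold questions correctly, so only their judgment on q1 would be kept.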

Tracking agreement with test questions is separate from inter-annotator agreement (IAA), which looks at how much different people agreed with each other. The authors use Krippendorff’s alpha for IAA and found 0.45, which is good agreement. I think this is fine for IAA on a task like this. There are some tasks where Krippendorff’s alpha isn’t the best choice, but for a small set of questions where there were a large number of responses per human annotator, like here, it is reliable.
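For readers who haven't computed it before, here is a small sketch of Krippendorff’s alpha for nominal labels, using the standard coincidence-matrix formulation. The input format (one list of labels per comment) and the function name are my own choices, and I don't know which implementation or distance metric the authors used.

```python
from collections import Counter
from itertools import permutations

def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal data.

    units: list of lists; each inner list holds the labels one item received.
    """
    coincidences = Counter()
    for labels in units:
        m = len(labels)
        if m < 2:
            continue  # a single rating carries no agreement information
        # Every ordered pair of ratings from different raters on the same
        # item contributes 1 / (m - 1) to the coincidence matrix.
        for a, b in permutations(labels, 2):
            coincidences[(a, b)] += 1 / (m - 1)

    totals = Counter()
    for (a, _b), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())

    observed = sum(w for (a, b), w in coincidences.items() if a != b)
    expected = sum(totals[a] * totals[b]
                   for a in totals for b in totals if a != b)
    if expected == 0:
        return 1.0  # degenerate case: no variation in the labels at all
    return 1 - (n - 1) * observed / expected

perfect = krippendorff_alpha_nominal([["toxic", "toxic"], ["ok", "ok"]])
opposed = krippendorff_alpha_nominal([["toxic", "ok"], ["toxic", "ok"]])
print(perfect, opposed)
```

Alpha is 1 when everyone agrees, around 0 when agreement is what chance would produce, and negative when raters disagree systematically, as in the second toy example.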

There are two other criticisms from Phipps which I think have deeper implications, but also have solutions: the bias in the demographics of the annotators and the bias in the demographics of the Wikipedia editors.

I believe that both can be addressed by looking at the data, and that this will be inherently interesting.

First, Phipps notes that the annotators were biased towards men (65%) and non-native English speakers. It’s possible this influenced their judgments. However, this is easily testable: the data is there to compare the annotations based on gender, age, education, and first language. If we have access to the gold/known questions, we can see if annotators from any demographic were more or less accurate.

Even without the gold/known answers information, we can look at the distributions of answers from different demographics, and see if there really are differences in response that correlate with each demographic.
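As a rough sketch of that comparison, the snippet below computes the share of each label given by each demographic group. The tuple layout, the group names, and the "unknown" fallback are assumptions for illustration, not the released data format.

```python
from collections import Counter, defaultdict

def label_distribution_by_group(judgments, demographics):
    """Share of each label per demographic group.

    judgments: iterable of (contributor_id, comment_id, label) tuples.
    demographics: dict mapping contributor_id -> group (hypothetical format).
    """
    counts = defaultdict(Counter)
    for contributor, _comment, label in judgments:
        group = demographics.get(contributor, "unknown")
        counts[group][label] += 1
    return {
        group: {label: c / sum(counter.values())
                for label, c in counter.items()}
        for group, counter in counts.items()
    }

judgments = [
    ("a", "c1", "toxic"), ("a", "c2", "ok"),
    ("b", "c1", "ok"), ("b", "c2", "ok"),
]
demographics = {"a": "women", "b": "men"}
distribution = label_distribution_by_group(judgments, demographics)
print(distribution)
```

On real data you would follow this with a significance test before reading anything into the differences.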

Finally, we can look at the agreement on a per comment basis. We could test, for example, whether women annotators tend to agree with other women more than they agree with men (or vice versa) showing correlation bias from annotators based on gender. I don’t have intuitions about whether the demographics of annotators will correlate with their interpretations, so I’m curious to see the results! (Friendly callout: Christopher Phipps, perhaps you could run this analysis as penance for the IAA comment? ;-) )
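One simple way to run that per-comment test is to compare how often pairs of annotators agree when they share a demographic with how often they agree when they don't. This is only a sketch under assumed input formats, and raw pairwise agreement is my simplification; a chance-corrected measure would be the more careful choice.

```python
from itertools import combinations

def pairwise_agreement_by_group(judgments, demographics):
    """Agreement rate for same-group and cross-group annotator pairs.

    judgments: iterable of (contributor_id, comment_id, label) tuples.
    demographics: dict mapping contributor_id -> group (hypothetical format).
    """
    by_comment = {}
    for contributor, comment, label in judgments:
        by_comment.setdefault(comment, []).append(
            (demographics[contributor], label))

    agree = {"within": 0, "across": 0}
    total = {"within": 0, "across": 0}
    for ratings in by_comment.values():
        # Compare every pair of annotators who rated the same comment.
        for (g1, l1), (g2, l2) in combinations(ratings, 2):
            kind = "within" if g1 == g2 else "across"
            total[kind] += 1
            agree[kind] += int(l1 == l2)
    return {kind: agree[kind] / total[kind] for kind in total if total[kind]}

judgments = [
    ("a", "c1", "toxic"), ("b", "c1", "toxic"),
    ("c", "c1", "ok"), ("d", "c1", "ok"),
]
demographics = {"a": "women", "b": "women", "c": "men", "d": "men"}
rates = pairwise_agreement_by_group(judgments, demographics)
print(rates)
```

A large gap between the "within" and "across" rates on real data would be the signal worth investigating.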

If there are biases that correlate with annotator demographics, then in many cases they can be adjusted for. For example, if there are different responses according to gender, the data can be re-aggregated so that of the 10 judgments for each comment, an equal number of people identifying as men and women are chosen, rather than the default of 65% men. This doesn’t help with people who identified as neither men nor women, as they were not represented in large enough numbers to rebalance the data in this way, but it would address any man/woman bias. The same is true for any unbalanced demographics: provided that there are a large enough number of the underrepresented demographics across all the data, we can adjust for it.
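The re-aggregation could look something like the sketch below: for each comment, sample the same number of judgments from each group and recompute the share of toxic votes. The group labels, the fixed random seed, and the choice to return None when a group has too few judgments are all my assumptions.

```python
import random
from collections import defaultdict

def balanced_toxicity_score(ratings, per_group, groups=("men", "women"), seed=0):
    """Share of toxic votes after sampling per_group ratings from each group.

    ratings: list of (group, is_toxic) pairs for a single comment.
    Returns None if any group has fewer than per_group ratings.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    by_group = defaultdict(list)
    for group, is_toxic in ratings:
        by_group[group].append(is_toxic)

    sample = []
    for group in groups:
        votes = by_group[group]
        if len(votes) < per_group:
            return None  # not enough judgments to rebalance this comment
        sample.extend(rng.sample(votes, per_group))
    return sum(sample) / len(sample)

# Toy comment: 6 men all said toxic, 4 women all said not toxic.
ratings = [("men", True)] * 6 + [("women", False)] * 4
raw_score = sum(is_toxic for _group, is_toxic in ratings) / len(ratings)
balanced_score = balanced_toxicity_score(ratings, per_group=4)
print(raw_score, balanced_score)
```

In the toy example the unbalanced score is 0.6 and the balanced one is 0.5, which is the kind of shift the rebalancing is meant to expose. Sampling discards some judgments; weighting each judgment by group would be an alternative that keeps them all.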

On the second, more complicated problem, Phipps notes that Wikipedia contributors are a non-diverse group of people, very over-represented by white males. This is a more serious limitation of the data set. To the extent that demographics can be tracked on Wikipedia, this could also be interesting. This new data set will allow us to track which people on Wikipedia are specifically targeted with toxic comments. We can therefore track how many of them continued to be editors and how many dropped out, and see if editors who are not white males are more likely to drop out. This could help explain why the community is already so non-diverse. So, it would be interesting from a sociological perspective to investigate this data set and the impact on those involved.

The remaining problem is that any Machine Learning model created on the data might be capable of only accurately identifying toxicity by white males. This is a valid concern and I hope there are evaluation data sets being produced that can test how true this is.

I know that the authors of the data set look at *many* different data sources in their work, so I presume the decision to release data only from Wikipedia was primarily driven by Wikipedia already being open data. At CrowdFlower, I don’t work with this Google/Jigsaw team on a regular basis, but when I last spoke to them it was to give advice on reducing bias in Machine Learning models that could come from biased data. So, from what I’ve seen, I know they are thinking about broader problems than this data set encompasses, and I’m glad that they have open sourced one data set so far!

All the best to everyone entering the Kaggle competition! In addition to building great algorithms, I hope you have the chance for some linguistic analysis, too.

Robert Munro

December 2017